| Technical Name | TAIMTAQ: A High-Performance Transformer Accelerator Chip for Edge Computing |
|---|---|
| Project Operator | Institute of Electronics, National Yang Ming Chiao Tung University |
| Project Host | 黃俊達 |

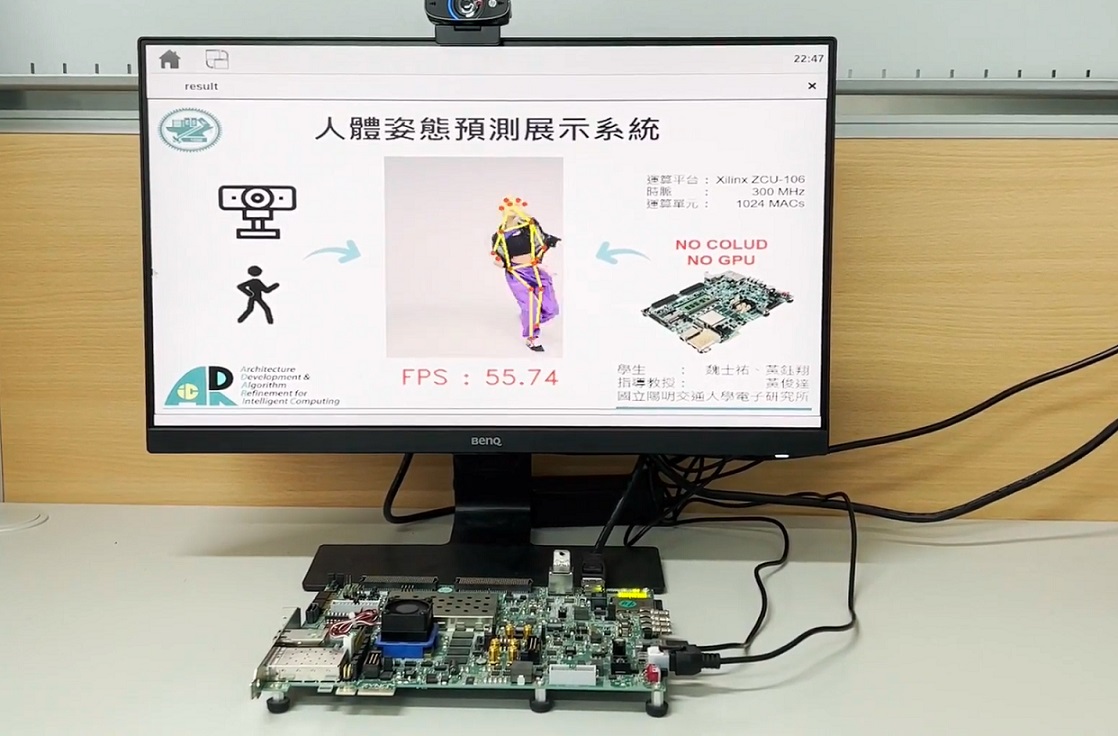

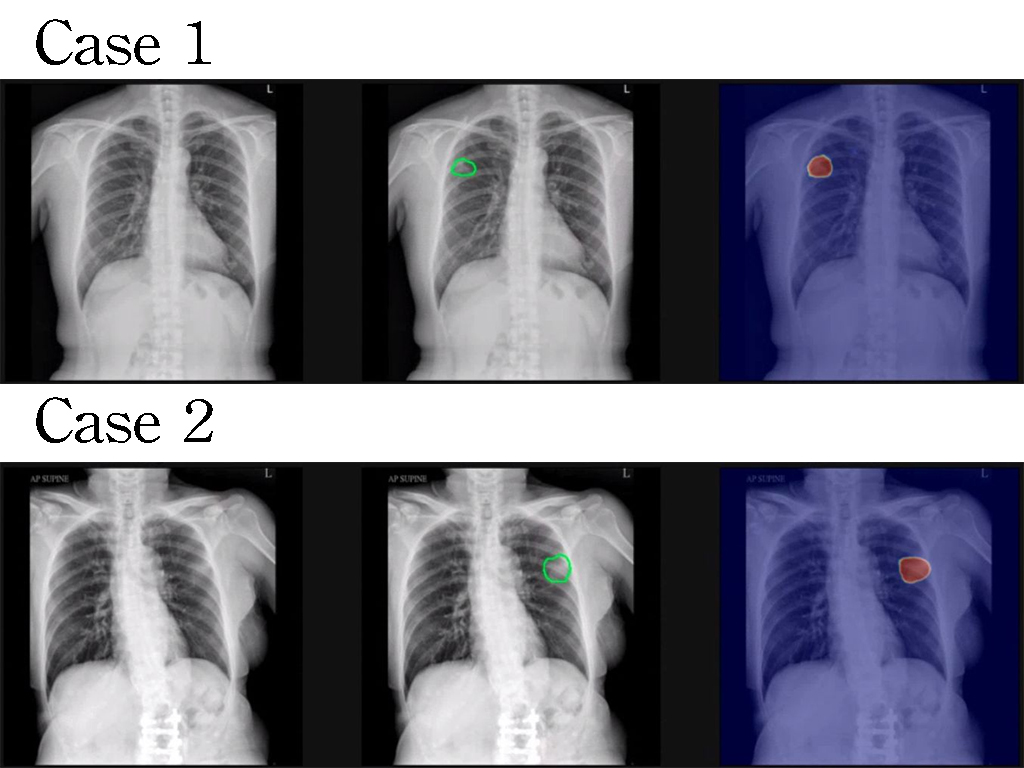

| Summary | Our team is dedicated to developing powerful and economical AI accelerator chips. We have built TAIMTAQ, a hardware accelerator designed specifically for Transformer models. It can run object detection models, image segmentation models, and even natural language models. We have made significant progress in computing power, energy efficiency, and miniaturization, and our overall performance surpasses both the SpAtten architecture proposed by MIT and the A3 architecture proposed by Seoul National University. |


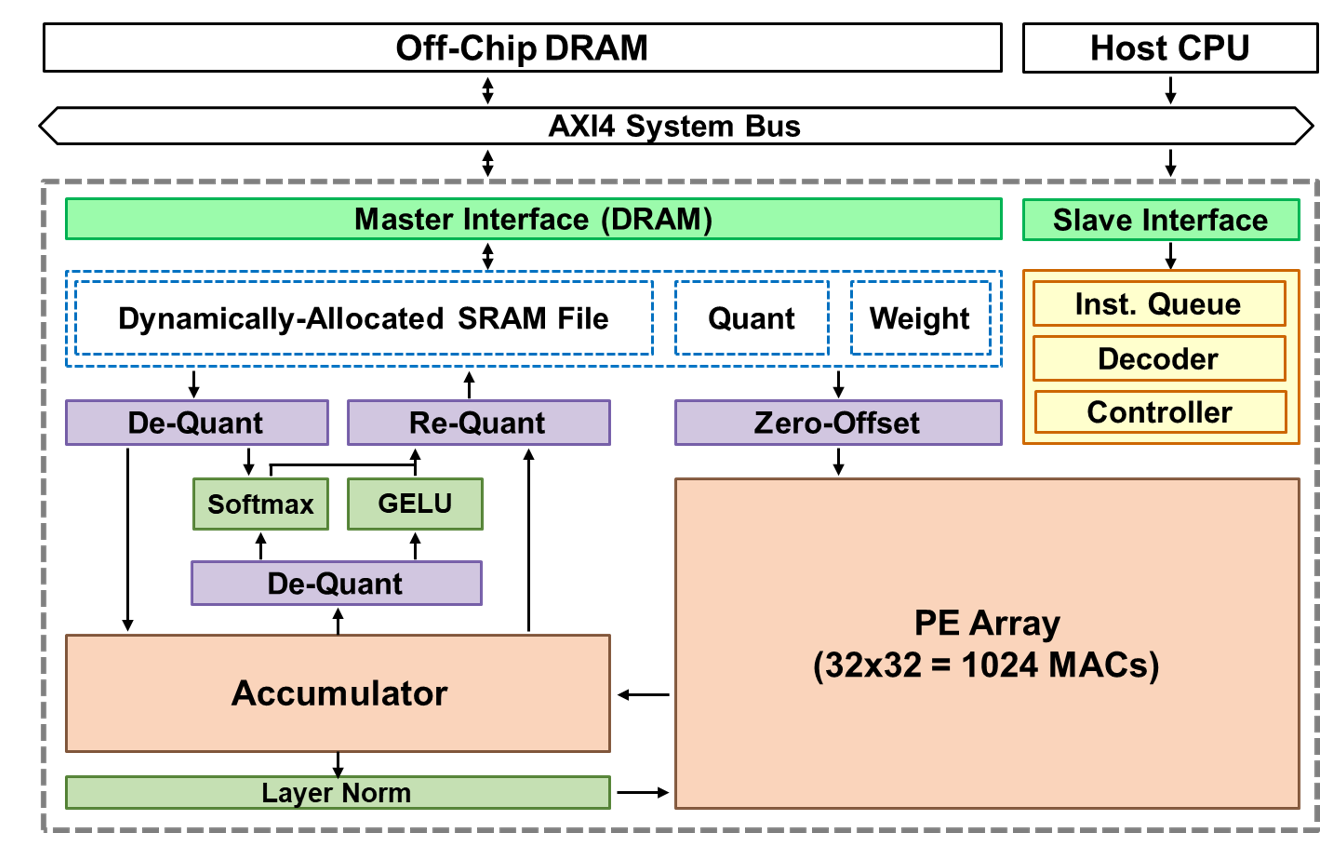

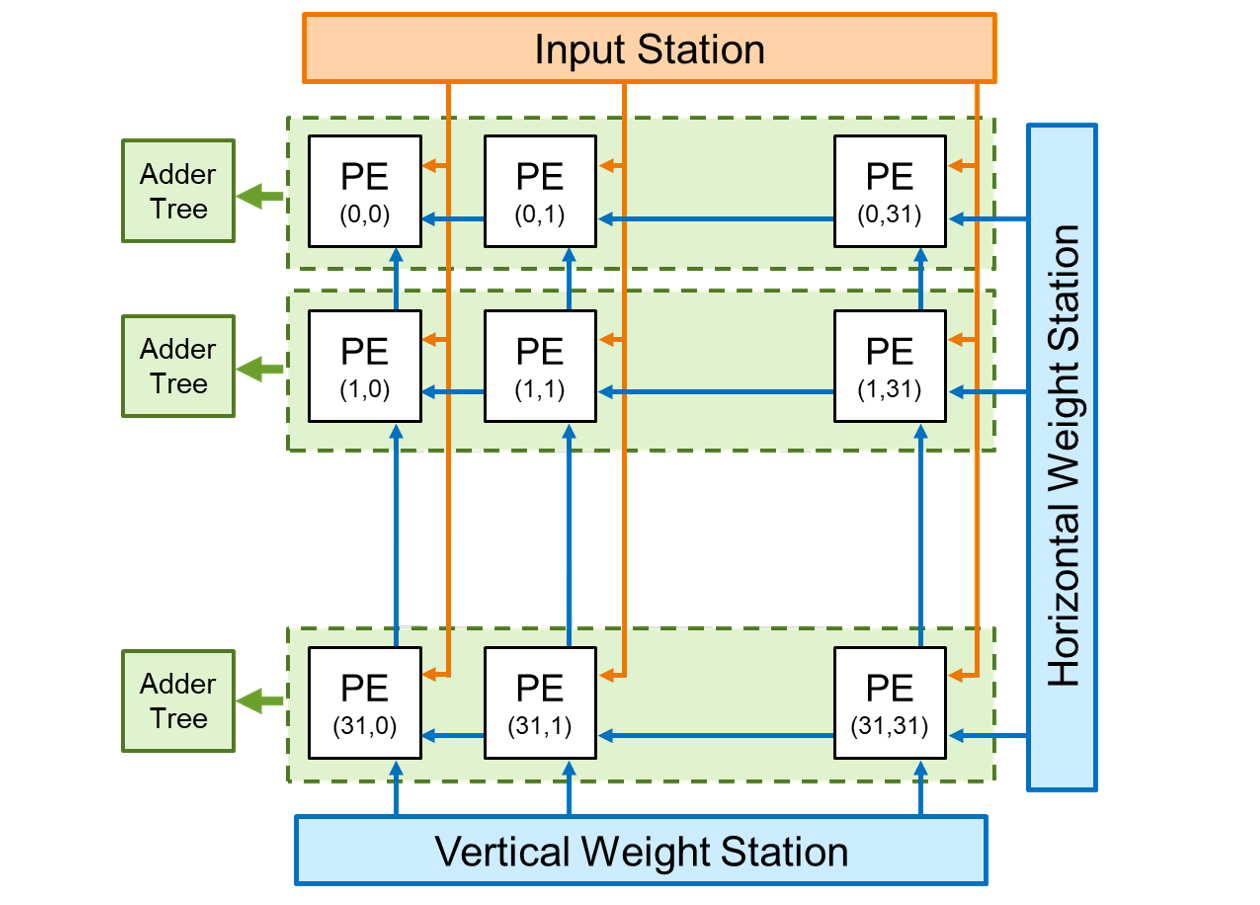

| Scientific Breakthrough | Our team proposes a novel strip-based matrix decomposition and a Horizontal-Vertical dataflow. We dynamically allocate the SRAM cache to reduce data bandwidth requirements. The accelerator includes carefully crafted computation modules for evaluating non-linear functions, and it supports INT8 quantization natively in hardware. TAIMTAQ reaches a 96.4% utilization rate when running Meta's DeiT-Small model, and the INT8 version loses less than 1% accuracy compared with the FP32 result. |
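The page does not describe how TAIMTAQ's strip-based decomposition or its INT8 datapath work internally. As a rough, hypothetical illustration of the general ideas only, the Python sketch below quantizes two FP32 matrices to INT8 with a symmetric per-tensor scale, accumulates the products as integers, and builds the output one block at a time from a horizontal strip of `A` and a vertical strip of `B`. The function names (`quantize_int8`, `matmul_int8_strips`, `matmul_fp32`), the strip sizes, and the quantization scheme are all assumptions. They are not the team's actual design.

```python
import math
import random

# Hypothetical sketch: generic INT8 quantization + strip-tiled matmul,
# NOT the TAIMTAQ hardware algorithm.

def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: q = round(x / scale), clipped to [-127, 127]."""
    max_abs = max(abs(v) for row in values for v in row)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [[max(-127, min(127, round(v / scale))) for v in row] for row in values]
    return q, scale

def matmul_int8_strips(a, b, strip_rows=2, strip_cols=2):
    """Multiply float matrices through INT8 operands with integer accumulation,
    producing the output block by block: (row strip of A) x (column strip of B)."""
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    n, k, m = len(a), len(b), len(b[0])
    out = [[0.0] * m for _ in range(n)]
    for r0 in range(0, n, strip_rows):          # horizontal strip of A
        for c0 in range(0, m, strip_cols):      # vertical strip of B
            for i in range(r0, min(r0 + strip_rows, n)):
                for j in range(c0, min(c0 + strip_cols, m)):
                    acc = 0  # integer accumulator (an INT32 register in typical hardware)
                    for t in range(k):
                        acc += qa[i][t] * qb[t][j]
                    out[i][j] = acc * sa * sb   # dequantize back to float
    return out

def matmul_fp32(a, b):
    """Plain floating-point reference product."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

# Usage: compare the INT8 result against the floating-point reference.
random.seed(0)
a = [[random.uniform(-1, 1) for _ in range(8)] for _ in range(5)]
b = [[random.uniform(-1, 1) for _ in range(6)] for _ in range(8)]
ref = matmul_fp32(a, b)
approx = matmul_int8_strips(a, b, strip_rows=2, strip_cols=4)
num = sum((x - y) ** 2 for rx, ry in zip(approx, ref) for x, y in zip(rx, ry))
den = sum(y ** 2 for ry in ref for y in ry)
print("relative error:", math.sqrt(num / den))
```

Because every output element is computed from the same integer operands, the strip sizes change only the order of work (and hence how much data must sit in on-chip memory at once), not the numerical result.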


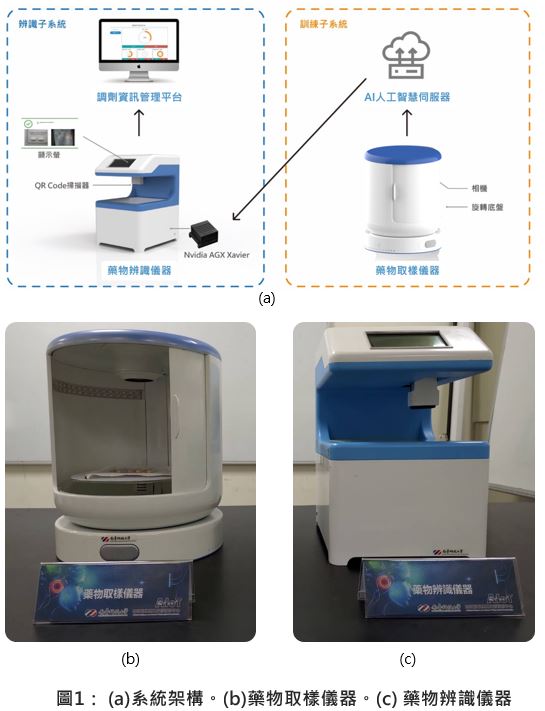

| Industrial Applicability | In just a few years, ChatGPT and other Transformer-based LLMs have achieved spectacular results. Instead of investing massive capital to construct expensive data centers, developers can use TAIMTAQ to greatly reduce the cost and energy of deploying AI chatbots on edge devices, creating more opportunities for applications. Meanwhile, keeping AI on the edge can effectively protect the privacy and cybersecurity of its users. Our vision is that one day, every person can have their very own AI. |


| Keywords | Artificial Intelligence, Deep Learning, Transformer Model, Language Model, Object Detection, Edge Computing, Low-latency Computing, Hardware Accelerator, Private AI, Quantization |

- Contact
  - HAO-CHUN CHOU
  - hcchou.ee11@nycu.edu.tw
