| Technology name | TAIMTAQ: A High-Performance Transformer Accelerator Chip for Edge Computing | ||

|---|---|---|---|

| Project unit | Institute of Electronics, National Yang Ming Chiao Tung University | ||

| Principal investigator | 黃俊達 | ||

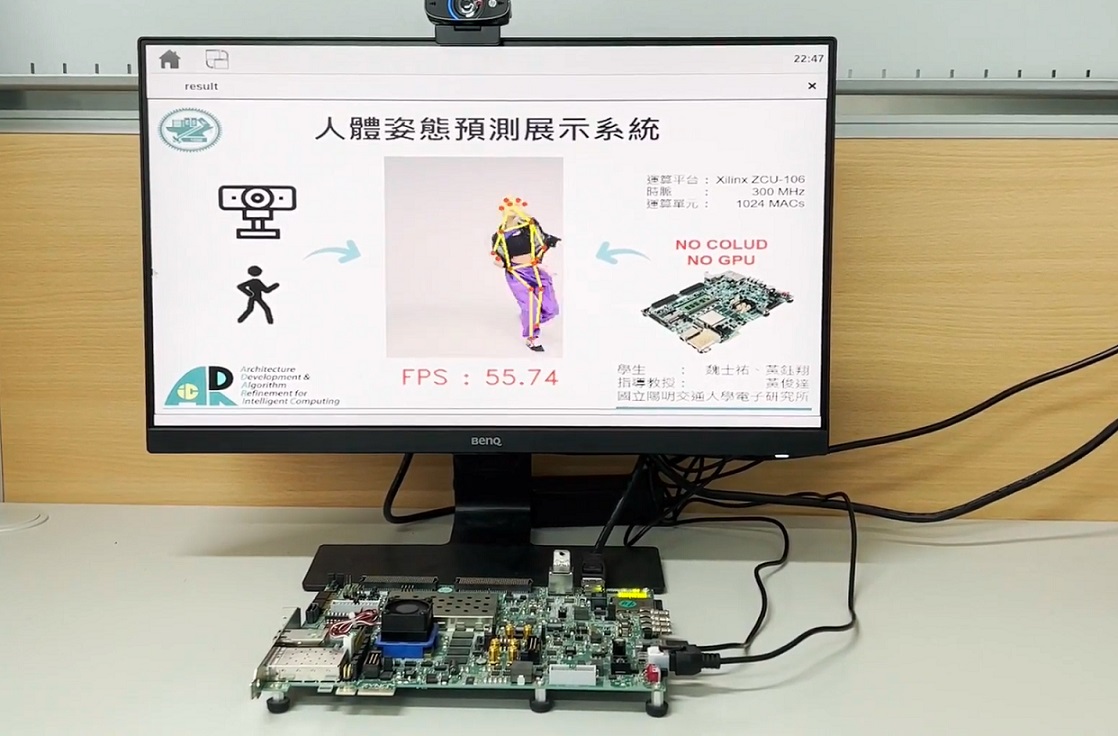

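| Technical overview | Our team develops compact, affordable AI accelerator chips. For the Transformer, today's most widely used architecture, we built TAIMTAQ, a general-purpose hardware accelerator that can run object tracking models, image segmentation models, and even language generation models. The chip makes substantial gains in throughput, energy efficiency, and area, and outperforms both MIT's SpAtten and Seoul National University's A3. | ||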

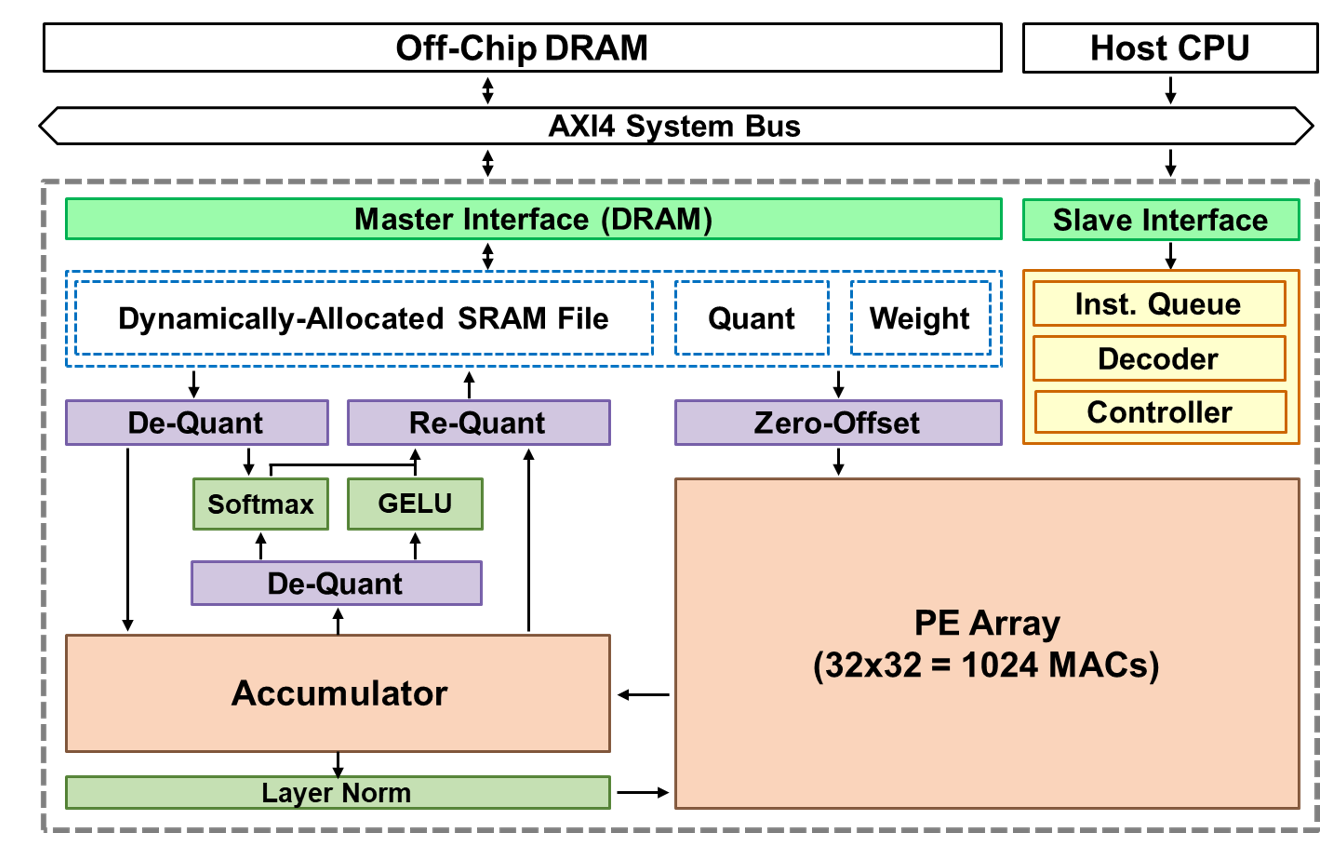

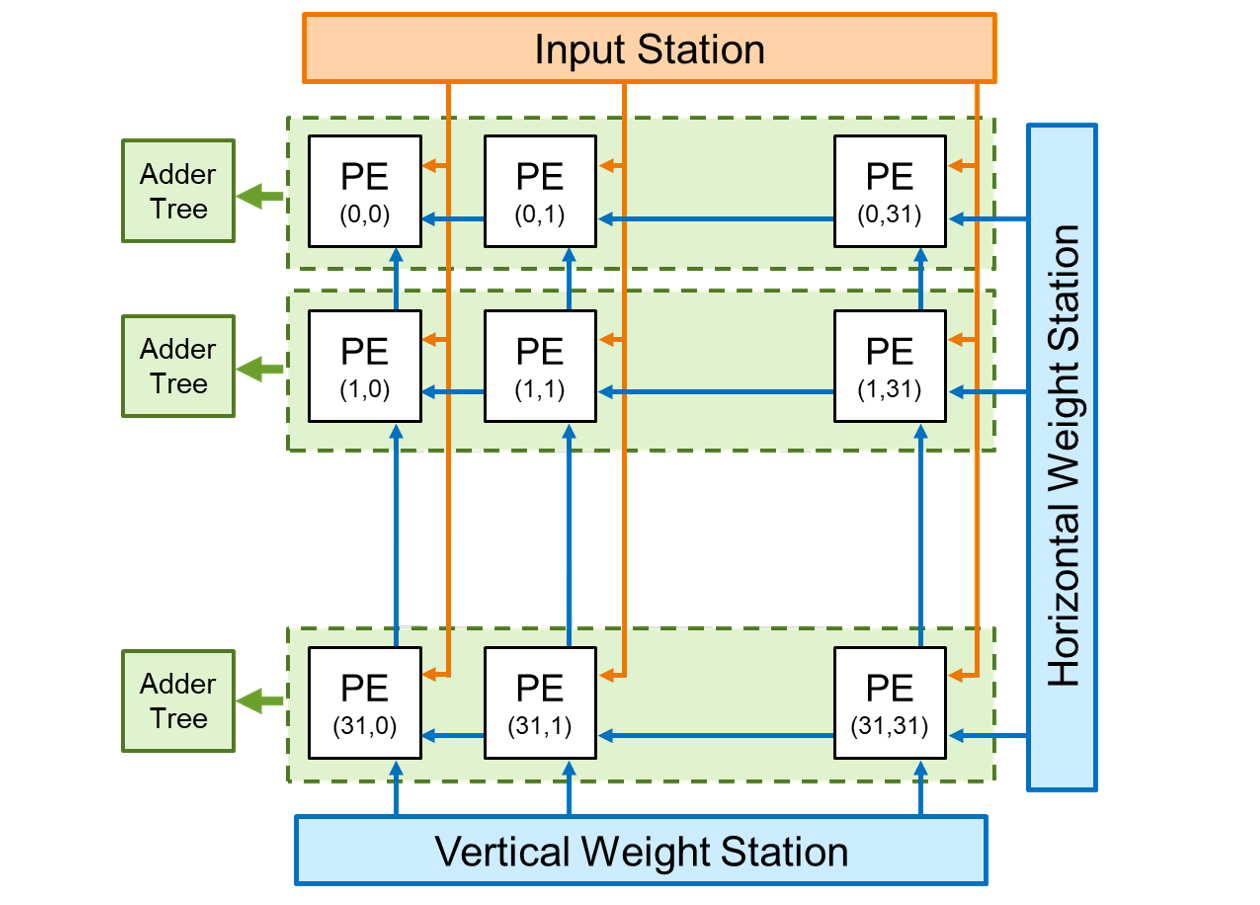

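| Scientific breakthrough | Our team proposes a finer-grained strip-wise matrix partitioning scheme and a dual dataflow mode (horizontal and vertical), and uses a dynamically allocated SRAM cache to reduce data movement. Nonlinear functions are implemented with compact compute units, and asymmetric INT8 parameter quantization is supported by on-chip hardware. When running the DeiT-small vision model, TAIMTAQ reaches 96.4% hardware utilization while keeping the accuracy drop below 1%. | ||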

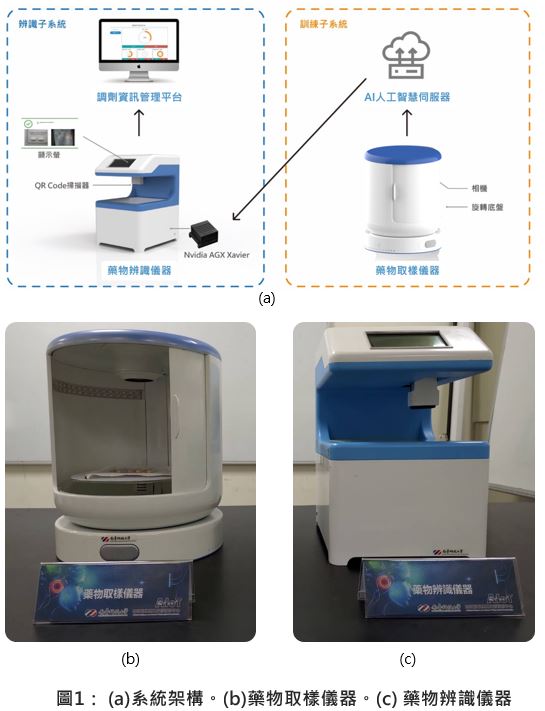
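The brief does not spell out how TAIMTAQ's asymmetric INT8 quantization is defined, so the following is only a minimal sketch of the commonly used affine formulation, in which a real value `x` is stored as `q = round(x / scale) + zero_point` with `q` in [-128, 127]. The function names, the choice to derive the range from the minimum and maximum of the data, and the sample values are all assumptions made for illustration and do not come from the chip's design.

```python
def asymmetric_int8_params(values):
    """Derive (scale, zero_point) mapping the data range onto [-128, 127].

    Assumption: the range is taken from the min and max of the data and is
    widened to include 0.0 so that zero is exactly representable.
    """
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    if hi == lo:  # all values are zero; any scale works
        return 1.0, 0
    scale = (hi - lo) / 255.0  # 255 steps between -128 and 127
    zero_point = round(-128 - lo / scale)  # integer code that represents 0.0
    zero_point = max(-128, min(127, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point):
    """Map real values to INT8 codes, saturating at the INT8 limits."""
    return [max(-128, min(127, round(v / scale) + zero_point)) for v in values]


def dequantize(codes, scale, zero_point):
    """Recover approximate real values from INT8 codes."""
    return [(q - zero_point) * scale for q in codes]


if __name__ == "__main__":
    weights = [-1.0, 0.0, 0.5, 3.0]  # hypothetical parameter values
    scale, zero_point = asymmetric_int8_params(weights)
    codes = quantize(weights, scale, zero_point)
    print(scale, zero_point, codes)
    print(dequantize(codes, scale, zero_point))
```

Unlike symmetric quantization, the nonzero zero point lets a skewed value range (here -1.0 to 3.0) use all 256 codes, at the cost of an extra offset term that the hardware has to handle during accumulation.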

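| Industrial applicability | In just a few years, ChatGPT and other large language models built on the Transformer architecture have advanced by leaps and bounds. Whereas such systems used to require heavy investment in cloud computing servers, TAIMTAQ can substantially reduce the cost and energy consumption of deploying conversational AI at the edge. Edge AI also helps safeguard users' data privacy and security. Our goal is to let everyone have an AI of their own. | ||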

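| Keywords | Artificial intelligence, deep learning, Transformer models, language models, object tracking, edge computing, low-latency computing, hardware accelerator, self-owned AI, numerical quantization | ||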

- Contact: 周浩鈞

- Email: hcchou.ee11@nycu.edu.tw