| Technical Name | Virtual Guidance for Robotic Navigation |
|---|---|
| Project Operator | National Tsing Hua University |
| Project Host | 賴尚宏 (Shang-Hong Lai) |
| Summary | Autonomous navigation is the core function of real-world applications such as robot vacuums, tour-guide robots, and delivery robots. However, such systems can be costly to build, since they typically rely on expensive sensors such as depth cameras or LiDARs. We present an effective, easy-to-implement, and low-cost modular framework for completing complex autonomous navigation tasks. |
| Scientific Breakthrough | 1. A straightforward, easy-to-implement, and effective modular learning-based framework for tackling the challenging problem of robot navigation in the real world. |

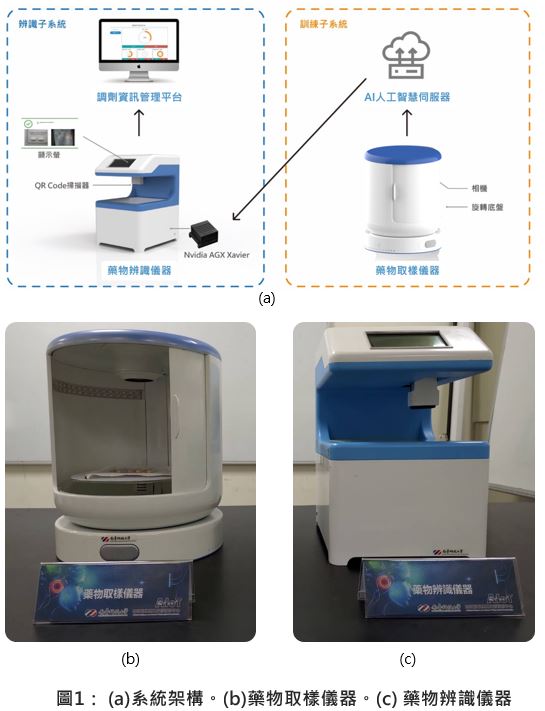

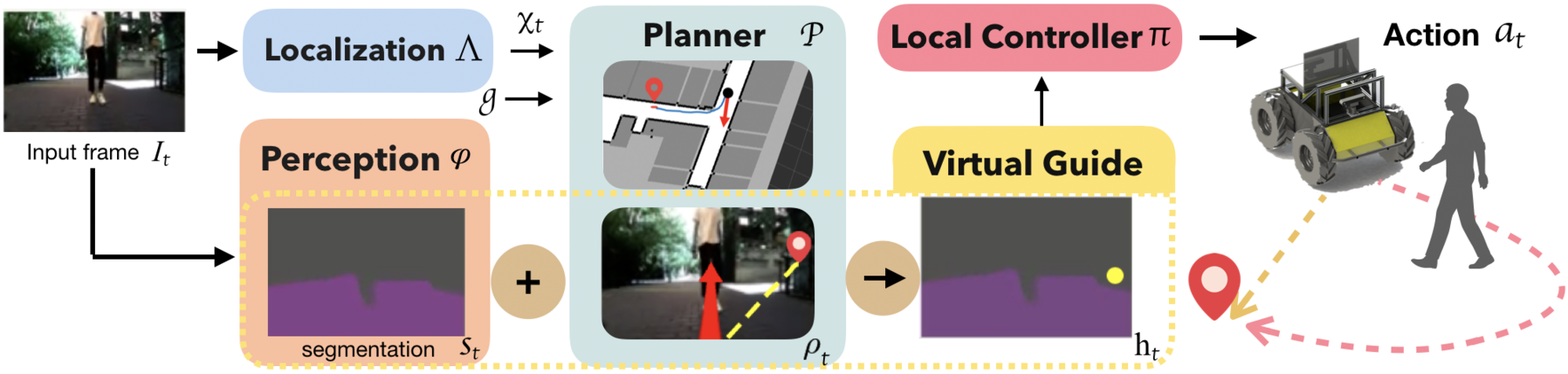

| Industrial Applicability | We propose a new modular framework that addresses the reality gap in the vision domain and navigates a robot via virtual signals. Our robot navigates with a single monocular camera and does not rely on LiDAR, a stereo camera, or odometry information from the robot. The framework consists of four modules: a localization module, a planner module, a perception module, and a local controller module. Within the framework, the virtual guide plays the role of a carrot (i.e., a lure) that entices the robot to move in a specific direction. Compared with conventional navigation approaches, our methodology is not only highly adaptable to diverse environments but also generalizes to complex scenarios. |
|---|---|
| Keyword | Intelligent Robotics, Computer Vision, Semantic Segmentation, Virtual-to-Real Transfer Learning, Simultaneous Localization and Mapping (SLAM), Deep Reinforcement Learning, Training Methodology in Virtual Environments, Construction of Virtual Environments, Parallel Embedded Cluster, Virtual Guidance Methodology for Robotics |
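
The Industrial Applicability description splits navigation into localization, planning, perception, and local control, with a virtual guide acting as a carrot placed ahead of the robot. The Python sketch below illustrates one way that data flow could fit together. It is a hypothetical, minimal example under stated assumptions, not the project's implementation: all names (`Pose`, `localize`, `plan`, `place_virtual_guide`, `perceive`, `control`, `navigate`) and parameters (0.5 m lookahead, gains, 0.1 s time step) are invented for illustration. The localization and planner modules are stubs, the guide is placed with a pure-pursuit-style lookahead rule (an assumption of this sketch, not necessarily the project's rule), the perception module is reduced to computing the guide's distance and bearing instead of processing monocular camera images, and a proportional steering law stands in for the local controller, which the keyword list suggests may be learned with deep reinforcement learning.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Pose:
    x: float
    y: float
    heading: float  # radians, measured counter-clockwise from the +x axis


def localize(estimate: Pose) -> Pose:
    # Localization module (hypothetical stub): a real system would estimate the
    # pose from monocular camera images; here the estimate passes through unchanged.
    return estimate


def plan(start: Point, goal: Point, via: List[Point], step: float = 0.1) -> List[Point]:
    # Planner module (hypothetical stub): a polyline start -> via points -> goal,
    # resampled so that consecutive points are at most `step` metres apart.
    corners = [start, *via, goal]
    path: List[Point] = []
    for (x0, y0), (x1, y1) in zip(corners, corners[1:]):
        n = max(1, math.ceil(math.dist((x0, y0), (x1, y1)) / step))
        path.extend((x0 + (x1 - x0) * i / n, y0 + (y1 - y0) * i / n) for i in range(n))
    path.append(goal)
    return path


def place_virtual_guide(pose: Pose, path: List[Point], lookahead: float) -> Point:
    # The "carrot" (assumed pure-pursuit-style rule): search forward from the path
    # point nearest the robot and return the first point at least `lookahead`
    # metres away; if none remains, the guide sits on the goal.
    here = (pose.x, pose.y)
    nearest = min(range(len(path)), key=lambda i: math.dist(here, path[i]))
    for point in path[nearest:]:
        if math.dist(here, point) >= lookahead:
            return point
    return path[-1]


def perceive(pose: Pose, guide: Point) -> Tuple[float, float]:
    # Perception module (simplified assumption): report the guide's distance and
    # bearing in the robot frame instead of rendering it into a camera image.
    dx, dy = guide[0] - pose.x, guide[1] - pose.y
    bearing = math.atan2(dy, dx) - pose.heading
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))  # wrap to [-pi, pi]
    return math.hypot(dx, dy), bearing


def control(distance: float, bearing: float,
            v_max: float = 0.5, k_turn: float = 1.5) -> Tuple[float, float]:
    # Local controller: a proportional steering law standing in for a learned
    # policy. Returns (linear m/s, angular rad/s); stops once the guide is reached.
    if distance < 0.05:
        return 0.0, 0.0
    return v_max * max(0.0, math.cos(bearing)), k_turn * bearing


def navigate(start: Pose, goal: Point, via: List[Point],
             lookahead: float = 0.5, dt: float = 0.1, max_steps: int = 2000) -> Pose:
    path = plan((start.x, start.y), goal, via)
    pose = start
    for _ in range(max_steps):
        pose = localize(pose)
        guide = place_virtual_guide(pose, path, lookahead)
        linear, angular = control(*perceive(pose, guide))
        if linear == 0.0 and angular == 0.0:
            break  # the controller stops only when the guide (then the goal) is reached
        # Unicycle kinematics stand in for the real robot's motion.
        pose = Pose(pose.x + linear * math.cos(pose.heading) * dt,
                    pose.y + linear * math.sin(pose.heading) * dt,
                    pose.heading + angular * dt)
    return pose


if __name__ == "__main__":
    final = navigate(Pose(0.0, 0.0, 0.0), goal=(2.0, 2.0), via=[(2.0, 0.0)])
    print(f"stopped at ({final.x:.2f}, {final.y:.2f})")
```

Running the script drives a simulated robot along an L-shaped route and prints where it stopped. Because the robot only ever chases the guide, the lookahead distance trades tracking against smoothness: a shorter lookahead hugs the route more tightly around the corner, while a longer one cuts the corner more.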

- Contact: cylee@cs.nthu.edu.tw
