| Technical Name | Vanishing Nodes: Another Phenomenon That Makes Training Deep Neural Networks Difficult |
|---|---|
| Project Operator | National Taiwan University |
| Project Host | 林宗男 |

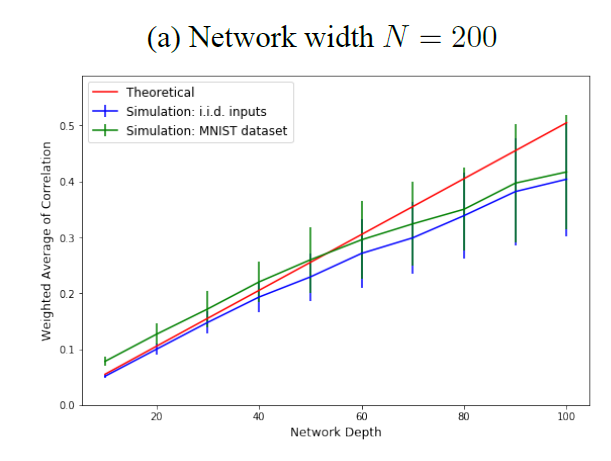

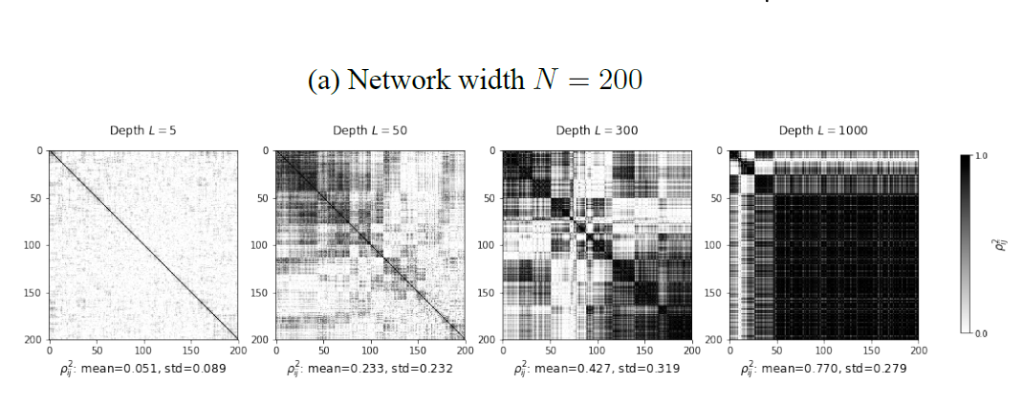

| Summary | We identify a phenomenon that makes deep neural networks harder to train. As a network becomes deeper, its hidden nodes become increasingly similar to one another, so the network's expressive power vanishes as depth grows. We call this problem "vanishing nodes." We show analytically that the severity of vanishing nodes is proportional to the network depth and inversely proportional to the network width. |
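To make the depth effect concrete, here is a minimal sketch, assuming a deep *linear* network whose layers are all `width x width` with i.i.d. Gaussian weights (variance `1/width`) and a standard Gaussian input. Under these assumptions the hidden-node covariance is exactly `M Mᵀ`, where `M` is the product of the weight matrices, so node correlations can be computed without sampling. The function name `mean_abs_node_correlation` and the choice of mean absolute correlation as the similarity measure are illustrative assumptions, not the analysis used in this work.

```python
import math
import random


def matmul(a, b):
    """Multiply two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def mean_abs_node_correlation(width, depth, seed=0):
    """Mean |correlation| between the output nodes of a random deep linear network.

    Hypothetical setup for illustration only: linear layers, i.i.d. Gaussian
    weights with variance 1/width, and a standard Gaussian input, so the
    covariance of the last hidden layer is exactly M @ M.T.
    """
    rng = random.Random(seed)
    std = 1.0 / math.sqrt(width)
    # Start from the identity: the "network" with zero layers.
    m = [[1.0 if i == j else 0.0 for j in range(width)] for i in range(width)]
    for _ in range(depth):
        w = [[rng.gauss(0.0, std) for _ in range(width)] for _ in range(width)]
        m = matmul(w, m)  # M_l = W_l M_{l-1}
        # Rescale to avoid overflow/underflow; correlations are scale invariant.
        scale = math.sqrt(sum(x * x for row in m for x in row))
        m = [[x / scale for x in row] for row in m]
    # Covariance of hidden nodes for x ~ N(0, I): C = M M^T.
    cov = [[sum(m[i][k] * m[j][k] for k in range(width)) for j in range(width)]
           for i in range(width)]
    total, pairs = 0.0, 0
    for i in range(width):
        for j in range(i + 1, width):
            total += abs(cov[i][j]) / math.sqrt(cov[i][i] * cov[j][j])
            pairs += 1
    return total / pairs


for depth in (1, 10, 50, 200):
    print(depth, round(mean_abs_node_correlation(width=8, depth=depth), 3))
```

The linear setting is chosen only because it gives exact covariances; for nonlinear activations one would instead estimate correlations from sampled hidden-node outputs. In this sketch, as the product of random matrices grows, one direction comes to dominate and the nodes become nearly perfectly correlated.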


| Scientific Breakthrough | It is well known that vanishing/exploding gradients make deep networks difficult to train. We show that another phenomenon, vanishing nodes, also makes training deep neural networks difficult, and that vanishing/exploding gradients and vanishing nodes are two distinct challenges. |


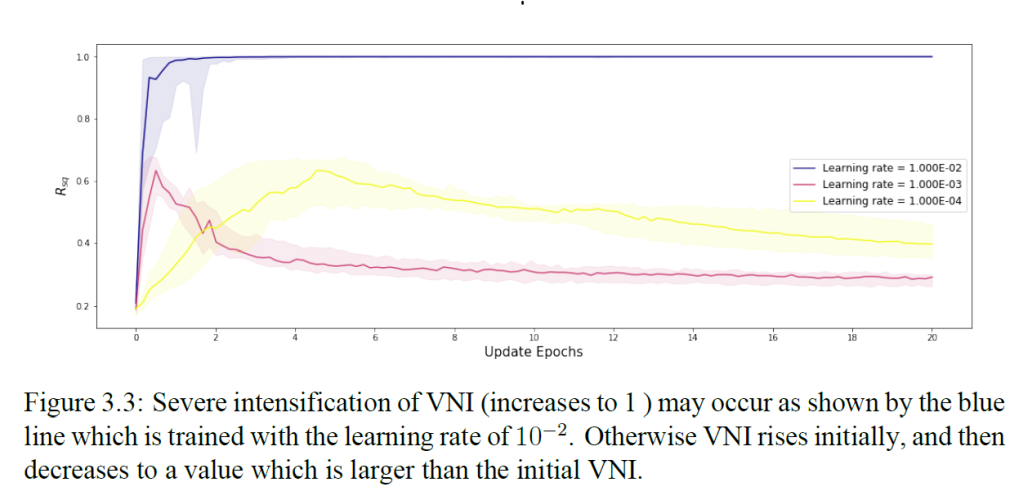

| Industrial Applicability | We propose the Vanishing Node Indicator (VNI) as a quantitative metric for vanishing nodes. The VNI indicates how much of a deep neural network's expressive power has vanished. We show that an approximation of the VNI is simply proportional to the network depth and inversely proportional to the network width. |
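The exact VNI formula is not given on this page, so the following is only a sketch of how a correlation-based indicator of this kind could be computed for one layer from sampled activations. The definition used here, `(1/N) * sqrt(sum_i sum_j rho_ij**2)` over the Pearson correlations `rho_ij` between the `N` hidden nodes, is an assumption for illustration, as is the function name `vanishing_node_indicator`. Under this assumed definition the score is 1 when all nodes are perfectly (anti)correlated and `1/sqrt(N)` when they are mutually uncorrelated.

```python
import math


def vanishing_node_indicator(activations):
    """Hypothetical VNI-style score for one layer (assumed definition).

    activations: list of samples, each a list of N hidden-node outputs.
    Returns (1/N) * sqrt(sum_i sum_j rho_ij^2), where rho_ij is the Pearson
    correlation between nodes i and j over the samples.
    """
    n_samples = len(activations)
    n_nodes = len(activations[0])
    columns = [[row[i] for row in activations] for i in range(n_nodes)]
    # Center each node's outputs and scale them to unit norm, so that
    # dot products between nodes are Pearson correlations.
    normalized = []
    for col in columns:
        mean = sum(col) / n_samples
        dev = [x - mean for x in col]
        norm = math.sqrt(sum(d * d for d in dev))
        if norm == 0.0:
            raise ValueError("a node with constant output has undefined correlation")
        normalized.append([d / norm for d in dev])
    total = 0.0
    for a in normalized:
        for b in normalized:
            rho = sum(x * y for x, y in zip(a, b))
            total += rho * rho
    return math.sqrt(total) / n_nodes


# Three mutually uncorrelated nodes versus two identical nodes.
uncorrelated = [[1, 1, 1], [1, -1, -1], [-1, 1, -1], [-1, -1, 1]]
identical = [[1, 1], [2, 2], [3, 3]]
print(vanishing_node_indicator(uncorrelated))  # about 0.577, i.e. 1/sqrt(3)
print(vanishing_node_indicator(identical))     # about 1.0
```

A higher score means the layer's nodes carry more redundant information; tracking such a score layer by layer is one plausible way to monitor the loss of expressive power with depth.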


| Keyword | Deep learning, neural network, artificial intelligence, learning theory, statistical analysis, vanishing gradient, training problem, expressive power, correlation, similarity |

- thl1213@ntu.edu.tw
