| Technical Name | Unforgetting deep continual lifelong learning |
|---|---|
| Project Operator | Institute of Information Science, Academia Sinica |
| Project Host | Chu-Song Chen (陳祝嵩) |

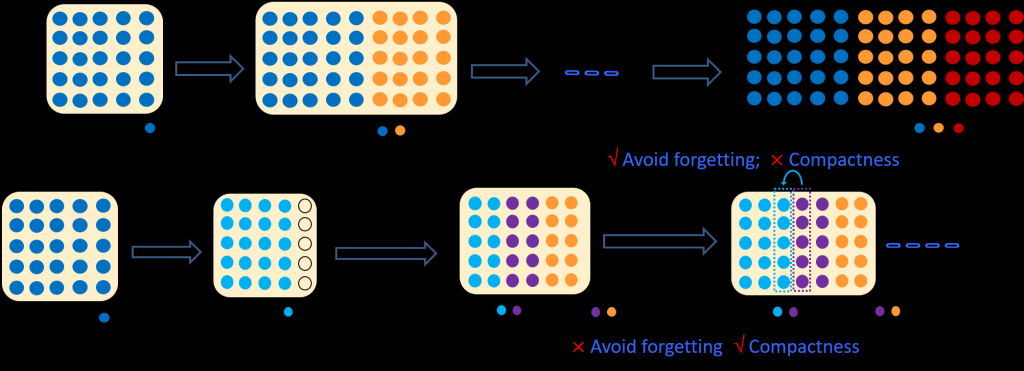

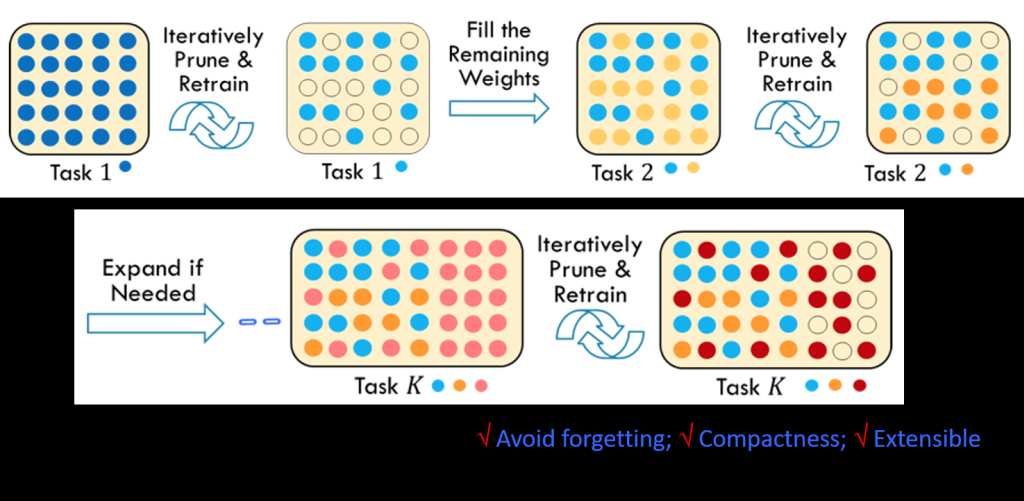

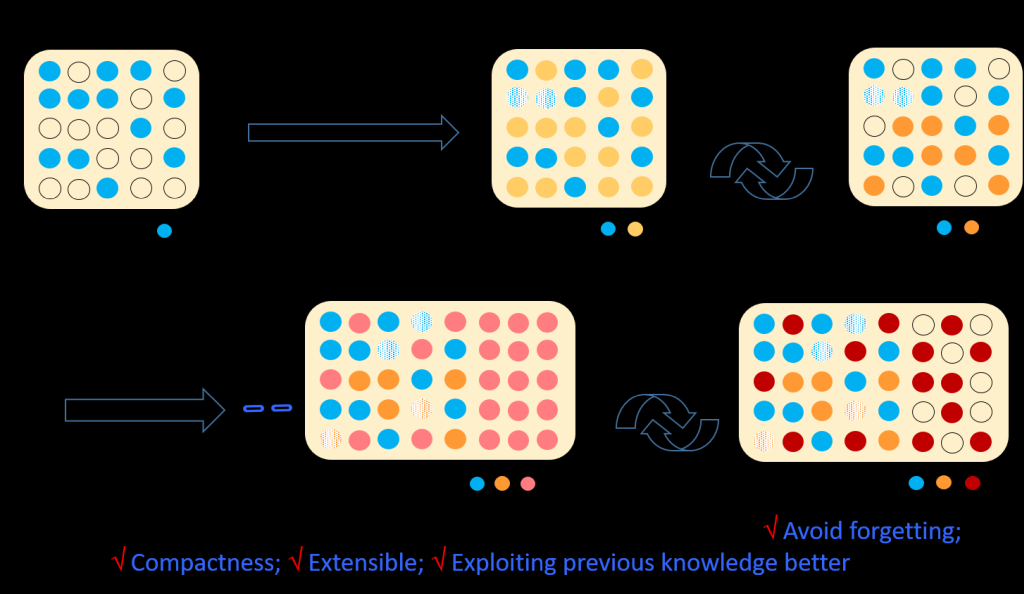

| Summary |
|---|
| We introduce a new technique for continual lifelong learning. Our method avoids forgetting: the function mappings built for previous tasks remain exactly the same when new tasks are added. It lets the architecture expand while keeping it compact, so it can handle an unlimited sequence of tasks. The method is sustainable and can reuse previous knowledge to improve performance on new tasks. These characteristics make it useful in practice. |
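
The guarantee that earlier function mappings stay exactly the same can be illustrated with weight partitioning, as in PackNet-style methods. Each weight belongs to one task, weights owned by earlier tasks are frozen, and a task's forward pass reads only weights owned by itself or by earlier tasks. The pure-Python sketch below assumes such a scheme. It is not necessarily the method used in this project, and `MaskedLinear`, `claim`, `forward`, and `train_step` are hypothetical names.

```python
import random


class MaskedLinear:
    """Hypothetical linear layer y = W x whose weights are partitioned among tasks."""

    def __init__(self, n_in, n_out, seed=0):
        self.rng = random.Random(seed)
        self.w = [[self.rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        # owner[i][j] is the id of the task that owns weight (i, j); None means free.
        self.owner = [[None] * n_in for _ in range(n_out)]

    def claim(self, task, k):
        """Assign up to k randomly chosen free weights to `task`."""
        free = [(i, j) for i, row in enumerate(self.owner)
                for j, o in enumerate(row) if o is None]
        for i, j in self.rng.sample(free, min(k, len(free))):
            self.owner[i][j] = task

    def forward(self, x, task):
        """Output for `task`, reading only weights owned by this task or earlier ones."""
        return [
            sum(w * xj for w, xj, o in zip(row, x, own) if o is not None and o <= task)
            for row, own in zip(self.w, self.owner)
        ]

    def train_step(self, x, target, task, lr=0.05):
        """One gradient step on 0.5 * squared error, updating only `task`'s own weights."""
        y = self.forward(x, task)
        for i, row in enumerate(self.w):
            err = y[i] - target[i]
            for j in range(len(row)):
                if self.owner[i][j] == task:  # weights of earlier tasks stay frozen
                    row[j] -= lr * err * x[j]


layer = MaskedLinear(n_in=4, n_out=2)

# Task 0: claim half of the weights and fit one input/target pair.
x_old, t_old = [1.0, 0.5, -0.5, 2.0], [1.0, -1.0]
layer.claim(task=0, k=4)
for _ in range(100):
    layer.train_step(x_old, t_old, task=0)
before = layer.forward(x_old, task=0)

# Task 1: train only the remaining free weights; task 0's weights are read but never updated.
x_new, t_new = [0.0, 1.0, 1.0, -1.0], [0.5, 0.5]
layer.claim(task=1, k=4)
for _ in range(100):
    layer.train_step(x_new, t_new, task=1)
after = layer.forward(x_old, task=0)

print(before == after)  # True: task 0's outputs are bit-for-bit unchanged
```

Task 1's forward pass still reads task 0's frozen weights. In this sketch, that is how a new task can reuse earlier knowledge without changing how the earlier task behaves.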


| Scientific Breakthrough |
|---|
| Current deep lifelong learning methods either suffer from forgetting or require large amounts of data to be replayed or regenerated for retraining, which makes them difficult to use in practice. Our technique avoids both restrictions. It guarantees no forgetting and keeps the model compact as it grows, so it remains sustainable. It can also exploit previous knowledge to improve performance on new tasks. |
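
Keeping the model compact while it grows can be illustrated with a prune-then-grow loop. After a task is learned, its least important weights are pruned and returned to a free pool. New capacity is added only when the free pool is too small for the next task. The sketch below makes three assumptions: weight magnitude serves as the importance measure, flat parallel lists stand in for a layer's parameters, and `compact` and `grow` are hypothetical helpers rather than this project's implementation. It also omits the retraining step that normally follows pruning. Pruning happens before a task's weights are frozen, so it does not conflict with the no-forgetting guarantee.

```python
import math


def compact(weights, owner, task, keep_ratio):
    """Prune the smallest-magnitude weights of `task` and return them to the free pool.

    `weights` and `owner` are parallel flat lists; owner[k] is a task id or None (free).
    Returns the number of weights released.
    """
    mine = [k for k, o in enumerate(owner) if o == task]
    n_keep = math.ceil(keep_ratio * len(mine))
    # Keep this task's largest-magnitude weights and release the rest.
    mine.sort(key=lambda k: abs(weights[k]), reverse=True)
    released = mine[n_keep:]
    for k in released:
        weights[k] = 0.0  # a pruned weight no longer contributes to this task
        owner[k] = None   # ...and becomes available to later tasks
    return len(released)


def grow(weights, owner, n_needed):
    """Append free, zero-initialized slots only if fewer than n_needed are free."""
    n_free = sum(o is None for o in owner)
    extra = max(0, n_needed - n_free)
    weights.extend([0.0] * extra)
    owner.extend([None] * extra)
    return extra


weights = [0.9, -0.05, 0.4, 0.01, 0.0, 0.0]
owner = [0, 0, 0, 0, None, None]

print(compact(weights, owner, task=0, keep_ratio=0.5))  # 2
print(weights)  # [0.9, 0.0, 0.4, 0.0, 0.0, 0.0]
print(grow(weights, owner, n_needed=3))  # 0: four free slots already suffice
print(grow(weights, owner, n_needed=6))  # 2: expand only by the shortfall
```

A real network would grow by adding neurons or filters rather than appending flat slots. The design point is the same: expand only after compaction has run out of free capacity.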


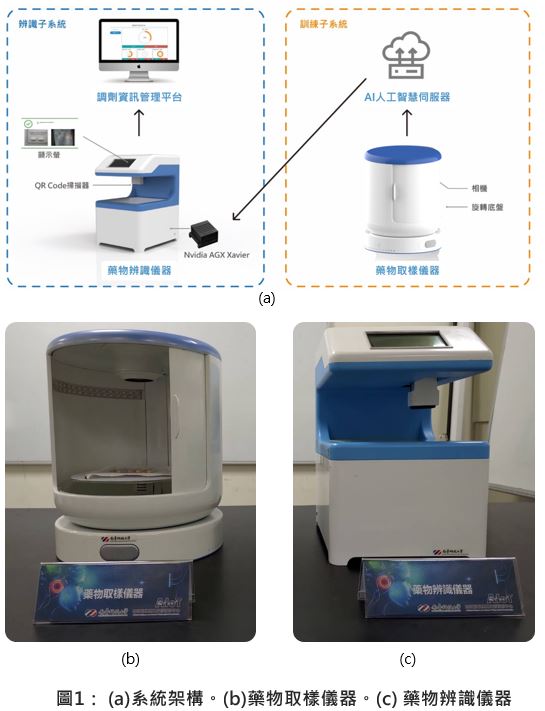

| Industrial Applicability |
|---|
| Many companies are incorporating AI into their systems. Once well-trained deep-learning models are deployed on devices, catastrophic forgetting makes them difficult to adapt to new data. Our technique can continually fine-tune the model and transfer previously learned knowledge and skills to new tasks while completely avoiding forgetting. It is useful for applications such as IoT, industry, smart robots, and multimedia. |


| Keywords |
|---|
| artificial intelligence, machine learning, deep learning, lifelong learning, continual learning, catastrophic forgetting, incremental learning, model compression, model expansion, multi-task learning |

- timmywan@iis.sinica.edu.tw
