| Technical Name | Sparse Neural Network Learning |
|---|---|
| Project Operator | National Taiwan University |
| Project Host | 林守德 |

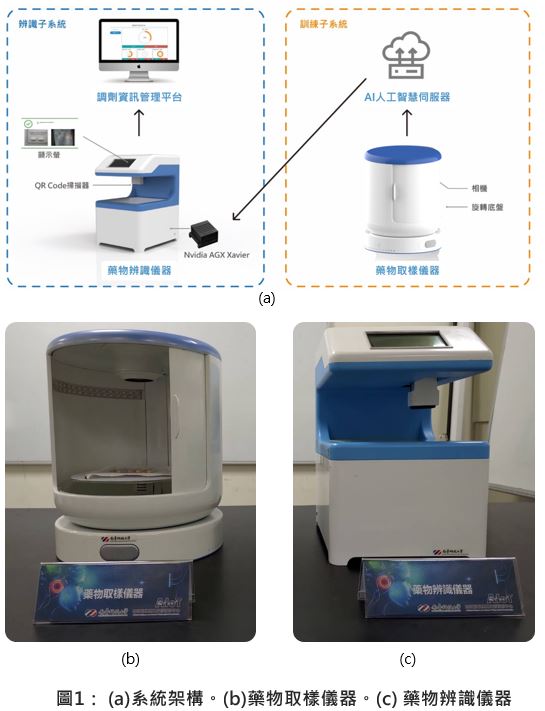

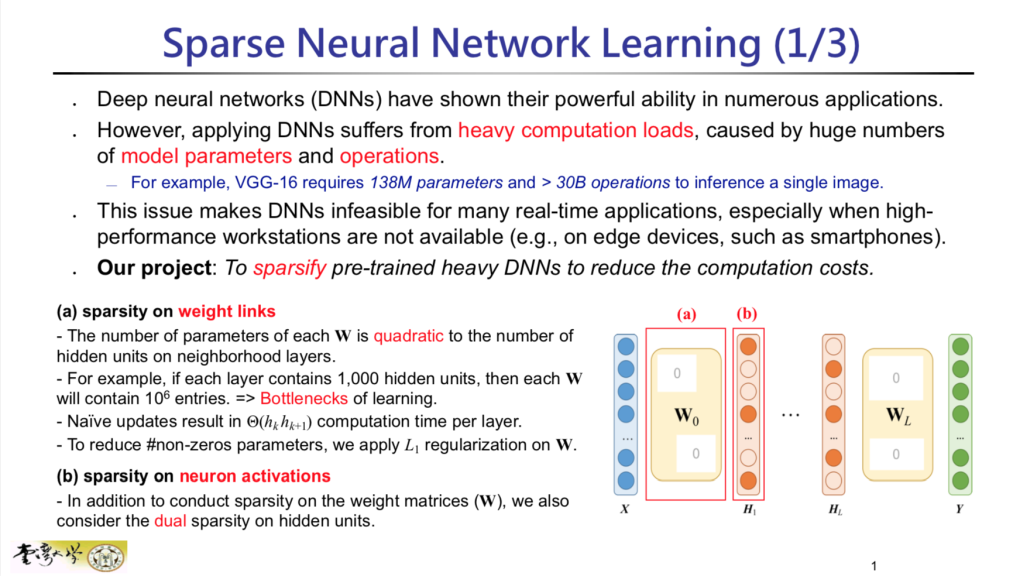

| Summary | A trained deep learning model can contain millions or even trillions of parameters, which can prevent it from running on lightweight end devices such as IoT sensors or mobile devices because of their limited memory and storage. To address this issue, we develop a novel sparse learning technique that not only reduces the computational cost but also retains the original model's performance. |

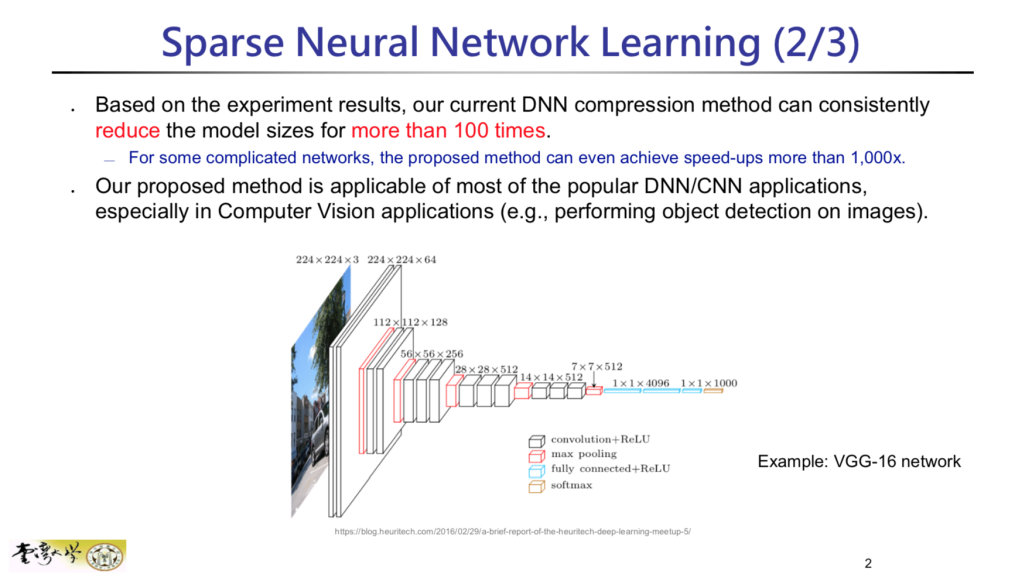

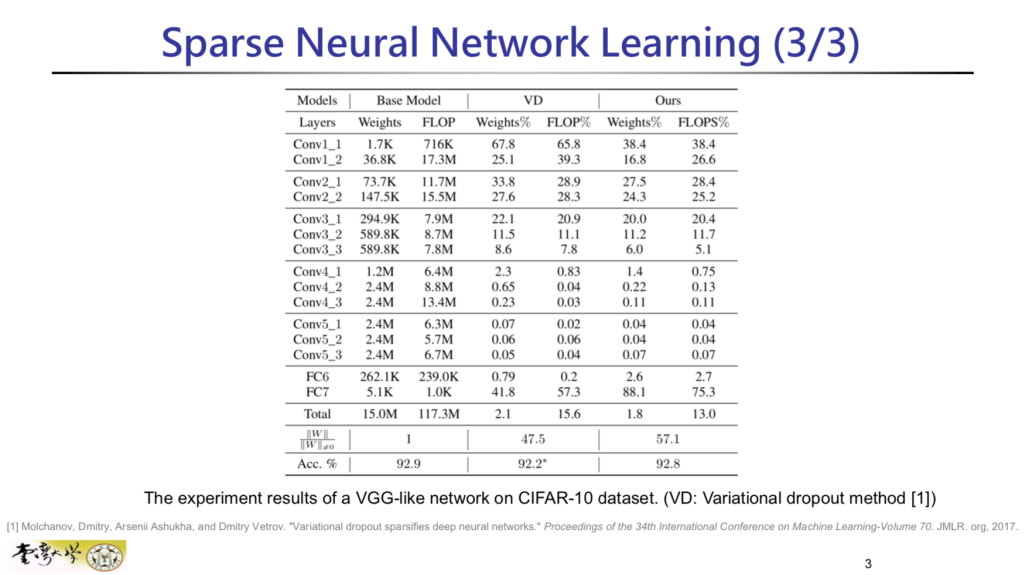

| Scientific Breakthrough | To tackle the issue of high computational cost, we apply L1 regularization to sparsify deep neural networks, and we develop a novel algorithm to optimize the resulting L1-regularized objective function. Current experimental results show that the proposed method can substantially reduce the number of parameters in commonly used networks (e.g., VGG networks). |
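The exact objective and the novel optimization algorithm are not described on this page, so the following is only a minimal sketch of the general idea. It assumes a standard L1-regularized objective, minimize loss(w) + λ·‖w‖₁, solved with proximal gradient descent (ISTA). ISTA is a well-known textbook method, not the authors' algorithm. A toy linear least-squares model stands in for a deep network, and the synthetic data, `lam`, learning rate, and step count are illustrative assumptions. The key point is that the soft-thresholding step sets small weights to exactly zero, so those weights can then be pruned.

```python
import numpy as np

def soft_threshold(w, t):
    """Proximal operator of t * ||w||_1: shrinks each entry toward zero by t
    and sets entries with |w| <= t exactly to zero."""
    return np.sign(w) * np.maximum(np.abs(w) - t, 0.0)

def l1_prox_gradient(X, y, lam, lr=0.1, steps=500):
    """Minimize (1/2n)||Xw - y||^2 + lam * ||w||_1 with proximal gradient
    descent (ISTA). A toy linear model stands in for a deep network here."""
    n, d = X.shape
    w = np.zeros(d)
    for _ in range(steps):
        grad = X.T @ (X @ w - y) / n                 # gradient of the smooth loss term
        w = soft_threshold(w - lr * grad, lr * lam)  # gradient step, then L1 proximal step
    return w

# Synthetic data (assumption): only the first 5 of 50 features matter.
rng = np.random.default_rng(0)
n, d = 200, 50
X = rng.standard_normal((n, d))
true_w = np.zeros(d)
true_w[:5] = [3.0, -2.0, 1.5, 4.0, -1.0]
y = X @ true_w + 0.01 * rng.standard_normal(n)

w = l1_prox_gradient(X, y, lam=0.1)
print("nonzero weights:", np.count_nonzero(w), "of", d)
```

Raising `lam` zeroes out more weights at the cost of more shrinkage on the weights that remain. The same trade-off between sparsity and accuracy applies when the penalty is placed on the weights of a deep network.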

| Industrial Applicability | Our sparse neural network learning method can be applied to a wide range of applications. It can substantially reduce the computational cost of inference with deep neural networks, which makes it practical to run neural network applications on IoT edge devices and personal mobile devices. |
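The following is a minimal sketch of why zeroed weights lower inference cost. A sparse storage format such as CSR (compressed sparse row) keeps and multiplies only the nonzero weights. The layer shape and the roughly 90% sparsity level are illustrative assumptions, not results from this project. The hand-written `to_csr` and `csr_matvec` helpers are hypothetical and exist only for illustration. Production code would use an optimized sparse library instead.

```python
import numpy as np

def to_csr(W):
    """Convert a dense matrix to CSR arrays: values, column indices, row pointers."""
    values, cols, row_ptr = [], [], [0]
    for row in W:
        nz = np.flatnonzero(row)  # column indices of nonzero entries in this row
        values.extend(row[nz])
        cols.extend(nz)
        row_ptr.append(len(values))
    return np.array(values, dtype=float), np.array(cols, dtype=int), np.array(row_ptr, dtype=int)

def csr_matvec(values, cols, row_ptr, x):
    """Compute W @ x using only stored nonzeros; also return the multiply-accumulate count."""
    out = np.zeros(len(row_ptr) - 1)
    for i in range(len(out)):
        start, end = row_ptr[i], row_ptr[i + 1]
        out[i] = values[start:end] @ x[cols[start:end]]
    return out, len(values)  # one multiply-accumulate per stored nonzero

# Assumed layer: 256 x 512 weights, about 90% of them driven to zero by training.
rng = np.random.default_rng(0)
W = rng.standard_normal((256, 512))
W[rng.random(W.shape) < 0.9] = 0.0
x = rng.standard_normal(512)

values, cols, row_ptr = to_csr(W)
y_sparse, macs = csr_matvec(values, cols, row_ptr, x)
print("dense MACs:", W.size, "sparse MACs:", macs)
print("results match:", np.allclose(y_sparse, W @ x))
```

CSR also stores one column index per nonzero and one pointer per row. It therefore saves memory and computation only when the weights are sufficiently sparse.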

| Keyword | Machine Learning, Deep Learning, Sparse Models, Edge AI, AI Applications, Video Data Analysis, IoT, Artificial Neural Network, Resource Constrained Situation, Big Data Mining |

- d05922017@ntu.edu.tw
