| Technical Name | Hybrid CNN Accelerator System Design and the Associated Model Training/Analyzing Tools | ||

|---|---|---|---|

| Project Operator | National Chiao Tung University | ||

| Project Host | 郭峻因 | ||

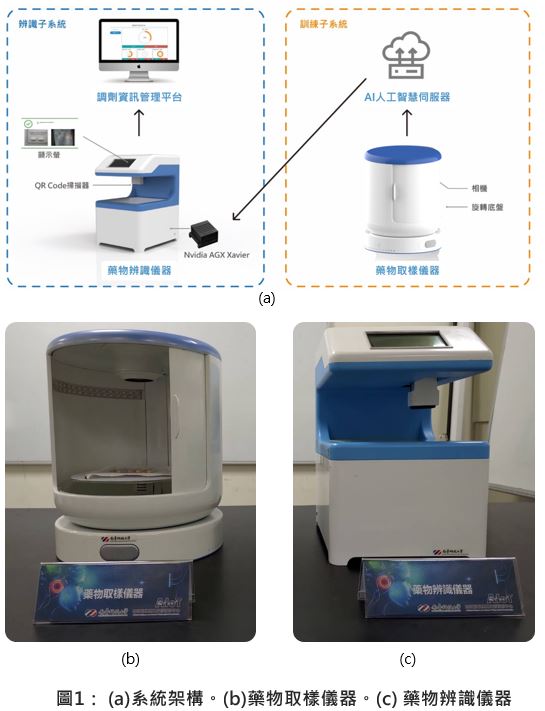

| Summary | Hybrid CNN DLA system |


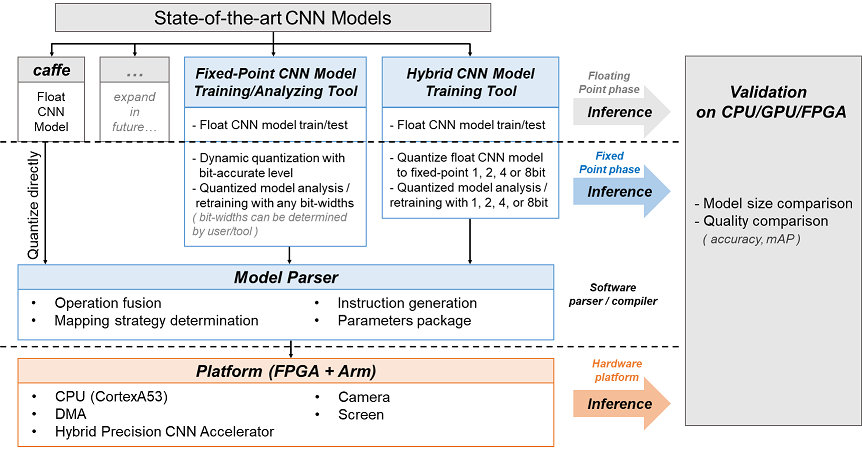

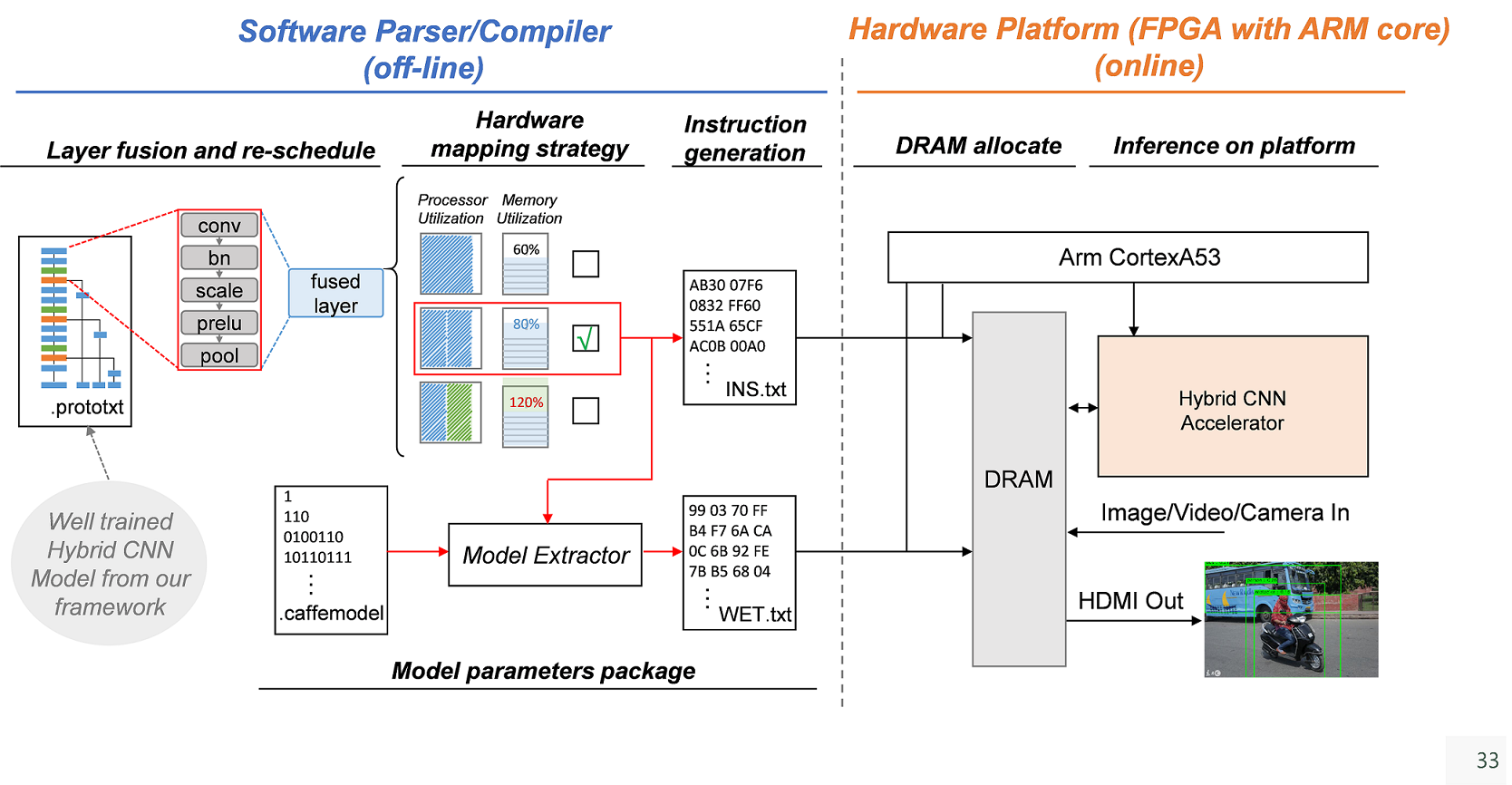

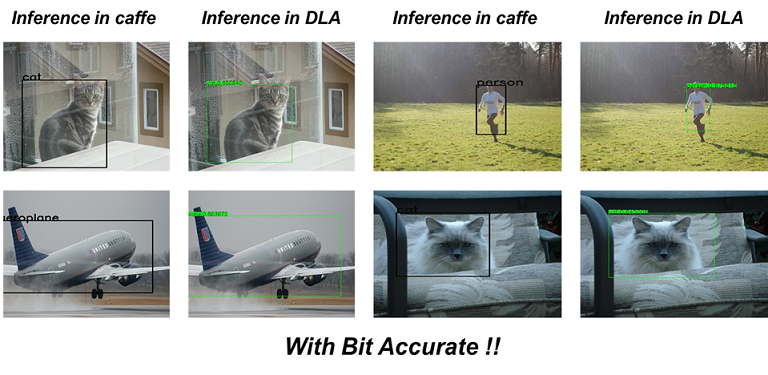

| Scientific Breakthrough | The Hybrid CNN DLA proposed in this work supports 1/1-, 2/2-, 4/4-, and 8/8-bit CNN operations. |


| Industrial Applicability | For industrial applications, our Hybrid CNN model training tool efficiently reduces model size, and the resulting model runs inference faster on our customized DLA. The software tool can be used for both CNN model training and retraining, and we can provide the corresponding DLA to run inference on the model in edge applications. This solution avoids the quantization error that arises when a floating-point model is ported to other edge devices. |


| Keyword | Training tool for Hybrid fixed point CNN Model, Dynamic Quantization, Lightweight Model, Binary Model, Hybrid Model, Bit-Accurate Analysis, Bit-Accurate Training, Deep Learning Quantization, Quantization Analysis | ||
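
The bit widths named under Scientific Breakthrough, and the quantization error mentioned under Industrial Applicability, can be illustrated with a small sketch. It is not the project's training tool or DLA arithmetic. It assumes a generic symmetric uniform quantizer over the range [-1, 1] and a sign-only binary case, and the names `quantize`, `mean_abs_error`, and the sample `weights` are hypothetical.

```python
def quantize(values, bits):
    """Fake-quantize floats to `bits` bits and return the dequantized floats.

    Assumption: values are expected in [-1, 1]; anything outside is clipped.
    """
    if bits == 1:
        # Binary case: keep only the sign of each value.
        return [1.0 if v >= 0 else -1.0 for v in values]
    # Symmetric signed grid, e.g. 127 steps per side for 8 bits, 7 for 4 bits.
    levels = 2 ** (bits - 1) - 1
    out = []
    for v in values:
        clipped = max(-1.0, min(1.0, v))
        out.append(round(clipped * levels) / levels)
    return out


def mean_abs_error(values, bits):
    """Average absolute difference between the values and their quantized form."""
    quantized = quantize(values, bits)
    return sum(abs(a - b) for a, b in zip(values, quantized)) / len(values)


# Hypothetical sample weights, chosen only for illustration.
weights = [-0.9, -0.35, -0.05, 0.1, 0.42, 0.77]
errors = {bits: mean_abs_error(weights, bits) for bits in (1, 2, 4, 8)}
for bits, err in errors.items():
    print(f"{bits}-bit mean abs error: {err:.4f}")
```

On these sample values the error grows as the bit width shrinks, which is the gap that bit-accurate training and analysis are meant to account for before a model is deployed on fixed-point hardware.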

- apple.35932003@gmail.com
