| Technical Name | Markov Recurrent Neural Networks |
|---|---|
| Project Operator | National Chiao Tung University |
| Project Host | Jen-Tzung Chien (簡仁宗) |

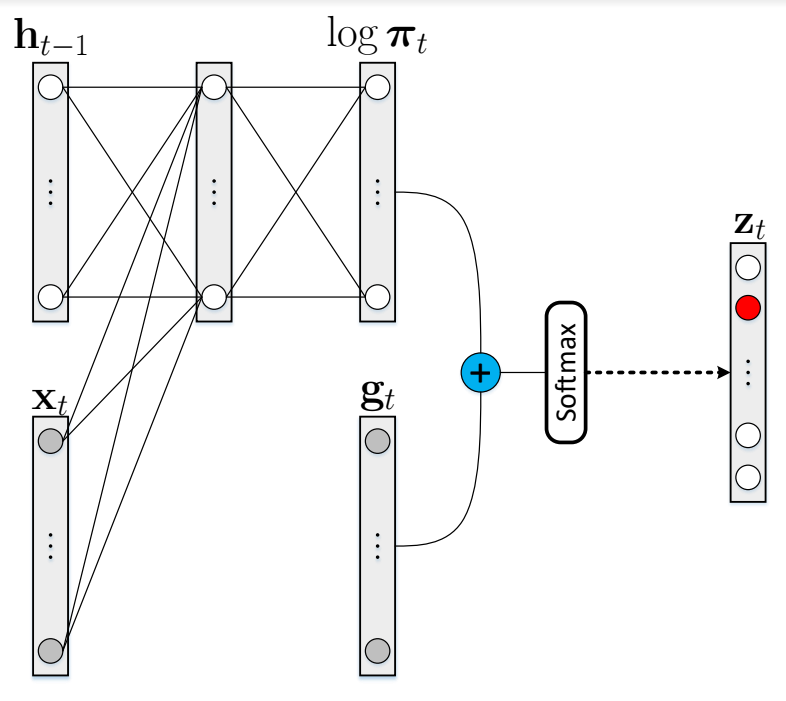

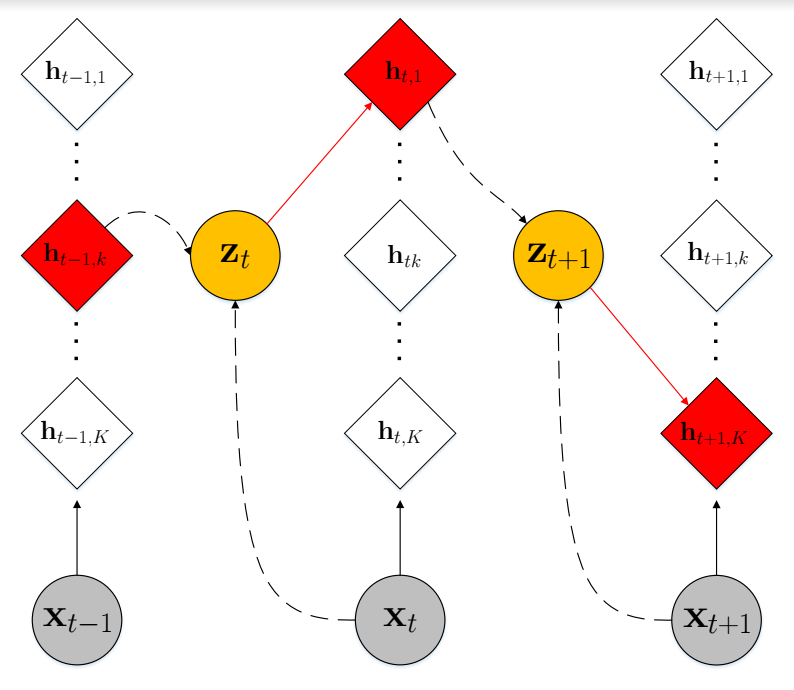

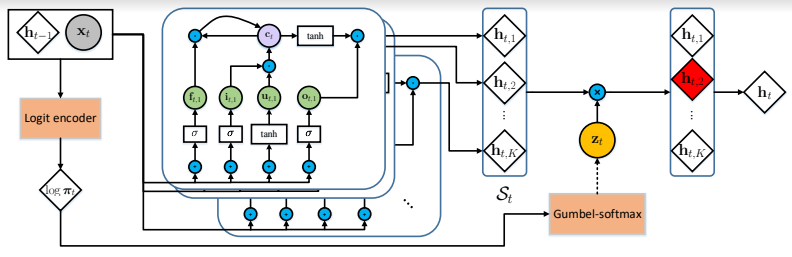

| Summary | MRNN introduces the Markov property into the hidden states of an RNN and treats the sequence of hidden states as a Markov chain. At each time step, this stochastic model follows a transition probability and encodes the sequential data with one of several different transition functions, which allows it to learn complex latent semantics and hierarchical sequential features. MRNN therefore considers not only the flexible nonlinear mapping of an RNN but also state transitions governed by a discrete random variable. |
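The summary's core idea (a discrete Markov state that selects which transition function updates the hidden state) can be illustrated with a minimal sketch. It assumes scalar inputs and hidden states, K = 3 hand-picked tanh transition functions, and a fixed transition matrix over the Markov states; `TRANSITIONS`, `A`, `transition_fn`, and `mrnn_forward` are hypothetical names. The actual MRNN learns its parameters by training and may compute the transition probabilities from the input and hidden state rather than from a fixed matrix.

```python
import math
import random
from typing import List, Sequence, Tuple

# Hypothetical parameters (w, u, b) for K = 3 transition functions.
# Transition function k is a simple tanh cell: h' = tanh(w * x + u * h + b).
TRANSITIONS: List[Tuple[float, float, float]] = [
    (0.5, 0.9, 0.0),    # Markov state 0
    (1.5, -0.3, 0.1),   # Markov state 1
    (-0.8, 0.4, -0.2),  # Markov state 2
]

# Hypothetical fixed Markov transition matrix: A[i][j] = P(z_t = j | z_{t-1} = i).
A: List[List[float]] = [
    [0.8, 0.1, 0.1],
    [0.2, 0.7, 0.1],
    [0.1, 0.2, 0.7],
]


def transition_fn(k: int, x: float, h: float) -> float:
    """Apply the k-th transition function to input x and previous hidden state h."""
    w, u, b = TRANSITIONS[k]
    return math.tanh(w * x + u * h + b)


def mrnn_forward(xs: Sequence[float], z0: int = 0, h0: float = 0.0,
                 seed: int = 0) -> Tuple[List[int], List[float]]:
    """Run the sketch over a sequence; return the sampled states and hidden states."""
    rng = random.Random(seed)
    z, h = z0, h0
    states: List[int] = []
    hiddens: List[float] = []
    for x in xs:
        # Sample the next discrete Markov state from row z of the transition matrix.
        z = rng.choices(range(len(A)), weights=A[z], k=1)[0]
        # Encode the current input with the transition function chosen by that state.
        h = transition_fn(z, x, h)
        states.append(z)
        hiddens.append(h)
    return states, hiddens
```

For example, `states, hiddens = mrnn_forward([0.1, -0.4, 0.7, 0.2])` returns one sampled Markov state and one hidden value per input step. Hard sampling with `random.choices` is not differentiable, which is why a trainable model needs a relaxation such as the Gumbel-softmax sampler mentioned in this listing.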

| Scientific Breakthrough | We developed stochastic transitions in recurrent neural networks by incorporating a Markov chain into a multi-path representation. In addition, a Gumbel-softmax sampler is introduced to infer the random Markov state. Experiments on speech enhancement show that the stochastic states in RNNs can learn complex latent information under different numbers of Markov states. |
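The Gumbel-softmax sampler replaces a hard categorical draw with a relaxed one-hot vector, y_k = softmax((logit_k + g_k) / τ), where each g_k is Gumbel(0, 1) noise; as the temperature τ approaches 0 the sample approaches one-hot. The sketch below is a minimal pure-Python illustration, not the project's code. `gumbel_softmax` and `soft_state_update` are hypothetical names, and combining the K transition outputs as a y-weighted sum is an assumption about how the relaxed state feeds the multi-path representation.

```python
import math
import random
from typing import List, Optional


def gumbel_softmax(logits: List[float], tau: float = 1.0,
                   rng: Optional[random.Random] = None) -> List[float]:
    """Draw a relaxed one-hot sample y with y_k = softmax((logit_k + g_k) / tau)."""
    rng = rng or random.Random()
    # Gumbel(0, 1) noise via g = -log(-log(u)), u ~ Uniform[0, 1).
    # random() may return 0.0, so clamp u away from 0 to keep the logs finite.
    gs = [-math.log(-math.log(max(rng.random(), 1e-12))) for _ in logits]
    scores = [(l + g) / tau for l, g in zip(logits, gs)]
    m = max(scores)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_state_update(y: List[float], candidates: List[float]) -> float:
    """Combine K candidate hidden states (one per transition function) weighted by y."""
    return sum(w * c for w, c in zip(y, candidates))
```

For example, `y = gumbel_softmax([1.0, 0.2, -0.5], tau=0.5, rng=random.Random(0))` yields three non-negative weights that sum to 1. Because every step is differentiable with respect to the logits, gradients can flow through the sampled Markov state during training, which a hard categorical draw does not allow.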

| Industrial Applicability | Natural language processing is one of the most important components of modern technology, with applications such as machine translation, information retrieval, and automatic question answering. The approach is also relevant to computer vision tasks such as license plate recognition, which may require estimating the motion state of each object in an image, restoring the image, and reconstructing the surrounding environment. |

| Keyword | Markov RNN, Markov chain, Gumbel-softmax, discrete latent structure, deep learning, recurrent neural network, deep sequential learning, source separation, stochastic transition, latent variable model |

- jtchien@nctu.edu.tw
