| Technical Name | Learning Deep Disentangled Representation for Cross-Domain Image Generation and Recognition |
|---|---|
| Project Operator | National Taiwan University |
| Project Host | 王鈺強 |

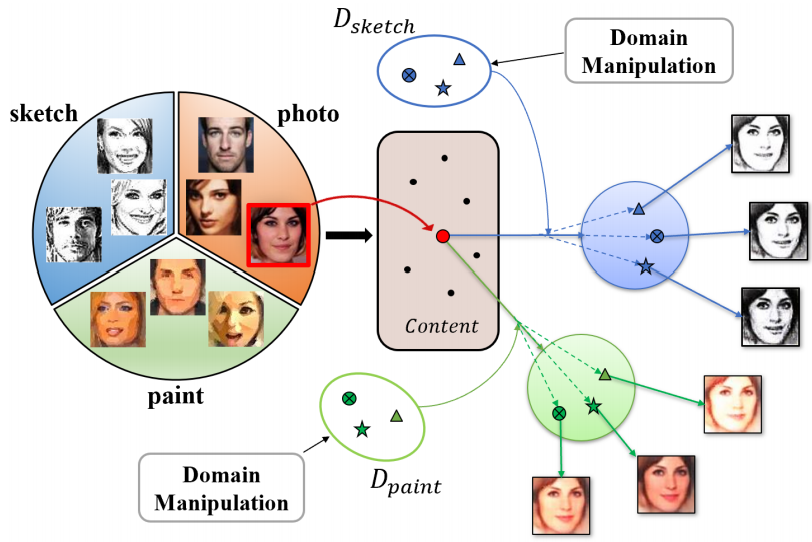

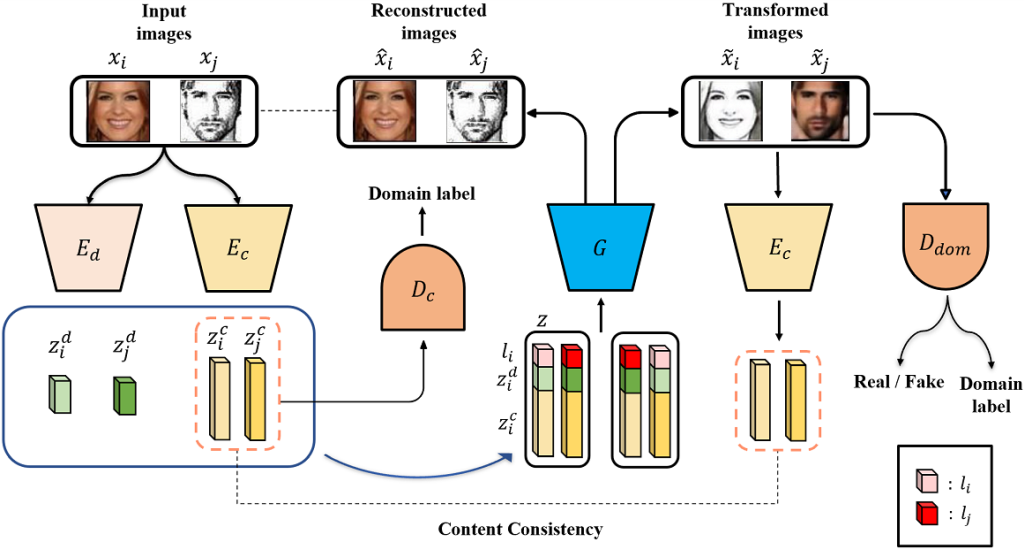

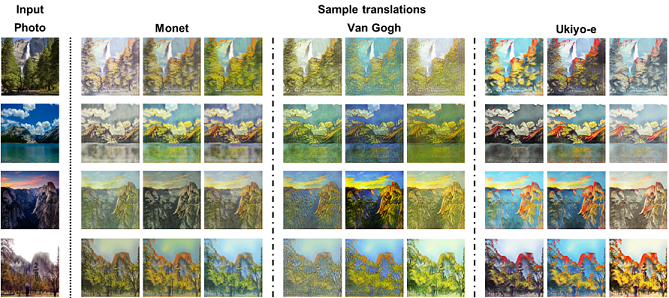

| Summary | Representation disentanglement aims to learn interpretable representations that can be manipulated to analyze cross-domain visual data. Building on adversarial learning and disentanglement techniques, our model learns a domain-invariant representation along with the associated domain-specific representations. With this technique, we address cross-domain image generation and recognition simultaneously. |
|---|---|
| Scientific Breakthrough | 1. Our technique factorizes latent features into disjoint parts that describe domain-invariant and domain-specific information. |
| Industrial Applicability | Our technique applies to transfer learning scenarios, and its most promising application is self-driving cars. It can transfer knowledge learned in one city, or even from simulated data, to another city for which no labeled training data is available. This reduces costly annotation and benefits companies developing self-driving cars. |
| Keyword | Machine learning, Deep learning, Computer vision, Transfer learning, Domain adaptation, Representation learning, Image-to-image translation, Style transfer, Image editing, Self-driving car |
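The factorization described under Scientific Breakthrough can be illustrated with a toy sketch. It shows why splitting a latent code into disjoint domain-invariant and domain-specific parts supports both tasks in the Summary. Generation recombines the invariant part of one sample with the specific part of another. Recognition uses only the invariant part, so one classifier can serve every domain. This is a hypothetical illustration, not the project's implementation. The fixed split index `n_invariant`, the helper names `split_latent`, `translate`, and `recognize`, the hand-written vectors, and the nearest-centroid classifier are all assumptions. In the actual model the split is learned through adversarial training, and a decoder turns the recombined code into an image.

```python
"""Toy sketch: how a disentangled latent code enables cross-domain
generation and recognition. All names, sizes, and values are hypothetical
stand-ins; no encoder, decoder, or adversarial training is modeled."""

from typing import Dict, List, Tuple

Vector = List[float]


def split_latent(z: Vector, n_invariant: int) -> Tuple[Vector, Vector]:
    """Factorize a latent vector into disjoint (domain-invariant, domain-specific) parts.
    Assumption: the first n_invariant entries hold the invariant content."""
    return z[:n_invariant], z[n_invariant:]


def translate(z_source: Vector, z_target: Vector, n_invariant: int) -> Vector:
    """Cross-domain generation: keep the source's invariant content and
    borrow the target's domain-specific code. A real model would decode this."""
    content, _ = split_latent(z_source, n_invariant)
    _, domain_code = split_latent(z_target, n_invariant)
    return content + domain_code


def recognize(z: Vector, centroids: Dict[str, Vector], n_invariant: int) -> str:
    """Cross-domain recognition: classify from the invariant part only,
    here with a nearest-centroid rule (an illustrative choice)."""
    content, _ = split_latent(z, n_invariant)

    def sq_dist(center: Vector) -> float:
        return sum((a - b) ** 2 for a, b in zip(content, center))

    return min(centroids, key=lambda label: sq_dist(centroids[label]))


# Hypothetical 4-d codes: dims 0-1 are invariant content, dims 2-3 are domain-specific.
car_in_simulator = [1.0, 0.0, 0.9, 0.1]
street_in_city_b = [0.0, 1.0, 0.2, 0.8]
class_centroids = {"car": [1.0, 0.0], "pedestrian": [0.0, 1.0]}

car_in_city_b = translate(car_in_simulator, street_in_city_b, n_invariant=2)
print(car_in_city_b)  # [1.0, 0.0, 0.2, 0.8]
print(recognize(car_in_simulator, class_centroids, 2))  # car
print(recognize(car_in_city_b, class_centroids, 2))  # car
```

Swapping the domain-specific part changes only the "style" half of the code, so the recognizer's answer stays the same. That is the property disentanglement is meant to provide when knowledge is transferred from simulated data or one city to another.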

- frs106@ntu.edu.tw
